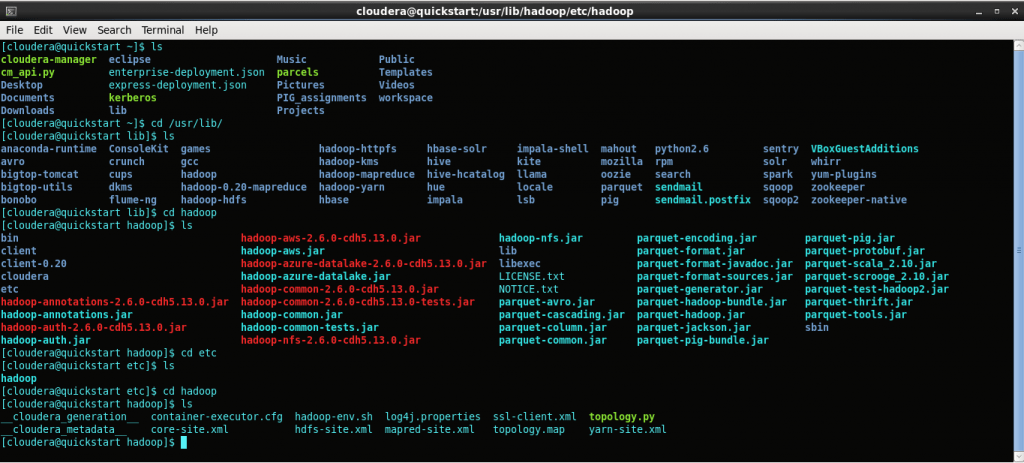

First, we will go through the list of Hadoop configuration files and their properties.

Use the commands below to open the configuration directory and list all configuration files:

$ cd /usr/lib/hadoop/etc/hadoop

$ ls

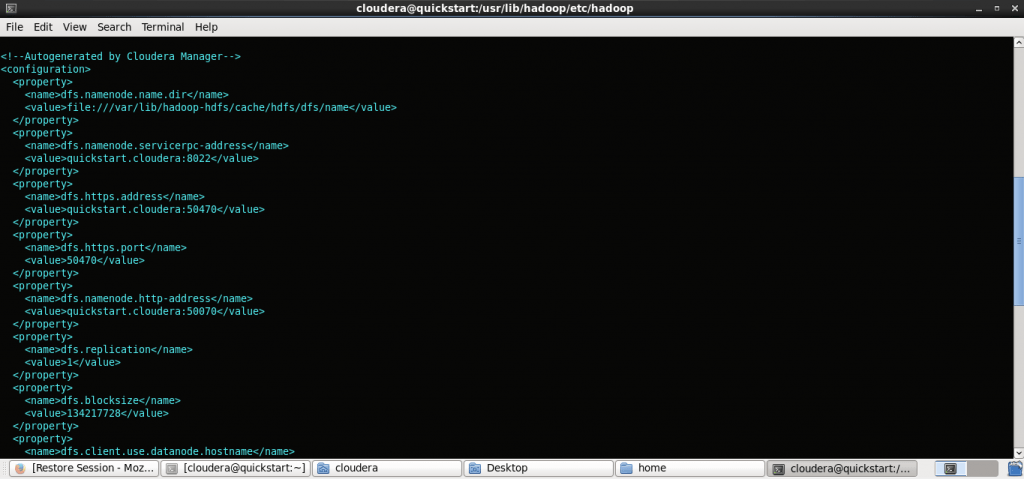

These files contain properties that Hadoop administrators can configure. Each property is enclosed in <property> </property> tags and holds a <name> and a <value>.
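To make the tag layout concrete, the sketch below writes a small scratch file containing the two HDFS properties discussed in this post, wrapped in the top-level `<configuration>` element that Hadoop's `*-site.xml` files use. The file name `sample-hdfs-site.xml` is hypothetical and chosen so that a real `hdfs-site.xml` is not overwritten.

```shell
#!/bin/sh
# Write a scratch copy (hypothetical file name) showing the <property> layout
# used by Hadoop's *-site.xml files. Values are the defaults discussed here.
cat > sample-hdfs-site.xml <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
</configuration>
EOF

# Count how many <property> entries the file contains.
grep -c '<property>' sample-hdfs-site.xml
```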

Suppose I want to add a file abc.txt of size 50 MB to HDFS. With the default configuration it will occupy 1 data block, because the default block size is 128 MB. The default replication factor is 3, so that one block is stored as three replicas on different nodes. To check these parameters, look at hdfs-site.xml.
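The block arithmetic above can be sketched in a few lines of shell. This assumes the default `dfs.blocksize` (128 MB) and `dfs.replication` (3); the variable names are illustrative only. Note that a block file on a DataNode only takes as much disk space as the data written to it, so a 50 MB block does not consume 128 MB.

```shell
#!/bin/sh
# Sketch: estimate HDFS block usage for one file, assuming the default
# dfs.blocksize (128 MB) and dfs.replication (3) described above.
file_mb=50        # size of abc.txt in MB
block_mb=128      # dfs.blocksize expressed in MB
replication=3     # dfs.replication

# Ceiling division: a partly filled last block still counts as a whole block.
blocks=$(( (file_mb + block_mb - 1) / block_mb ))
# Every block is stored once per replica.
block_replicas=$(( blocks * replication ))
# Blocks only use the bytes actually written, so raw storage is size x replicas.
raw_mb=$(( file_mb * replication ))

echo "blocks=$blocks block_replicas=$block_replicas raw_storage_mb=$raw_mb"
```

With `file_mb=50` this prints `blocks=1 block_replicas=3 raw_storage_mb=150`. Setting `file_mb=300` would give 3 blocks (two full 128 MB blocks plus one 44 MB block).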

Run the command below to view these parameters:

$ cat hdfs-site.xml
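When you only need one value rather than the whole file, a small helper can pull it out. The `get_property` function below is a hypothetical sketch, not part of Hadoop: it assumes `<name>` and `<value>` sit on their own lines (as in the default files) and is not a general XML parser. On a running cluster, `hdfs getconf -confKey dfs.replication` prints the effective value, including defaults from hdfs-default.xml when the key is not set in hdfs-site.xml.

```shell
#!/bin/sh
# Sketch: print the <value> of one property from a Hadoop *-site.xml file.
# Assumes <name> and <value> are on separate lines; not a general XML parser.
get_property() {
  # $1 = property name, $2 = file
  awk -v key="$1" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, "")
      print; exit
    }
  ' "$2"
}

# Scratch file with a hypothetical name, so the real hdfs-site.xml is untouched.
cat > sample-hdfs-site.xml <<'EOF'
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
</configuration>
EOF

replication=$(get_property dfs.replication sample-hdfs-site.xml)
blocksize=$(get_property dfs.blocksize sample-hdfs-site.xml)
echo "dfs.replication=$replication dfs.blocksize=$blocksize"
```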

The replication factor here is 3 because my Hadoop cluster has 11 nodes. If you have a single-node Hadoop cluster, there is no point in keeping three replicas: HDFS never stores two replicas of the same block on one node, so on a single node the replication factor should be set to 1.
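The reasoning above can be sketched as a tiny function. `effective_replicas` is a hypothetical helper, not a Hadoop command; it only models the rule that each DataNode holds at most one replica of a given block, so the number of replicas that can exist is capped by the number of DataNodes. Blocks whose target cannot be met are reported by HDFS as under-replicated.

```shell
#!/bin/sh
# Sketch: how many replicas of each block can actually exist.
# HDFS never places two replicas of the same block on one DataNode,
# so the usable replica count is capped by the number of DataNodes.
effective_replicas() {
  # $1 = dfs.replication, $2 = number of live DataNodes
  if [ "$2" -lt "$1" ]; then
    echo "$2"
  else
    echo "$1"
  fi
}

single_node=$(effective_replicas 3 1)
eleven_nodes=$(effective_replicas 3 11)
echo "1 node: $single_node replica(s); 11 nodes: $eleven_nodes replica(s)"
```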

As you can see, dfs.blocksize is set to 134217728 bytes (128 × 1024 × 1024), i.e. 128 MB. These parameters can be changed by editing the corresponding XML files.
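The sketch below converts a block size in MB to the byte value that `dfs.blocksize` holds and writes it into a scratch copy of the file. The file name `sample-hdfs-site.xml`, the `mb_to_bytes` helper, and the 256 MB example are all hypothetical; the awk edit assumes `<name>` and `<value>` are on separate lines, as in the default files.

```shell
#!/bin/sh
# Sketch: convert MB to the byte value dfs.blocksize expects, then update a
# scratch copy of hdfs-site.xml (hypothetical file name).
mb_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

default_bytes=$(mb_to_bytes 128)   # the default shown above
new_bytes=$(mb_to_bytes 256)       # example of a larger block size

cat > sample-hdfs-site.xml <<'EOF'
<configuration>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
</configuration>
EOF

# Replace the <value> that follows <name>dfs.blocksize</name>.
# Assumes <name> and <value> are on separate lines, as in the sample.
awk -v key="dfs.blocksize" -v val="$new_bytes" '
  index($0, "<name>" key "</name>") { found = 1; print; next }
  found && /<value>/ {
    sub(/<value>[^<]*<\/value>/, "<value>" val "</value>")
    found = 0
  }
  { print }
' sample-hdfs-site.xml > sample-hdfs-site.xml.tmp &&
  mv sample-hdfs-site.xml.tmp sample-hdfs-site.xml

echo "128 MB = $default_bytes bytes; 256 MB = $new_bytes bytes"
```

Keep in mind that a new `dfs.blocksize` applies only to files written after the change; existing files keep the block size they were written with.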

For more details, refer to the links below:

https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

https://hadoop.apache.org/docs/r2.8.0/hadoop-project-dist/hadoop-common/core-default.xml

Author Profile

- Passionate traveller, reviewer of restaurants and bars, tech lover, everything about data processing, analyzing, SQL, PLSQL, pig, hive, zookeeper, mahout, kafka, neo4j

Latest Post by this Author

- PLSQL, April 26, 2020: How effectively we can use temporary tables in Oracle?
- Big Data, August 15, 2019: How to analyze hadoop cluster?
- Big Data, July 28, 2019: How to setup Hadoop cluster using cloudera vm?
- Big Data, May 25, 2019: How to configure parameters in Hadoop cluster?